This post is part of the lecture notes of my class “Introduction to Online Learning” at Boston University, Fall 2019.

You can find all the lectures I published here.

In this lecture, we will explore a bit more under which conditions we can get better regret upper bounds than . Also, we will obtain this improved guarantees in an automatic way. That is, the algorithm will be adaptive to characteristics of the sequence of loss functions, without having to rely on information about the future.

1. Adaptive Learning Rates for Online Subgradient Descent

Consider the minimization of the linear regret

Using Online Subgradient Descent (OSD), we said that the regret for bounded domains can be upper bounded by

With a fixed learning rate, the learning rate that minimizes this upper bound on the regret is

Unfortunately, as we said, this learning rate cannot be used because it assumes the knowledge of the future rounds. However, we might be lucky and we might try to just approximate it in each round using the knowledge up to time . That is, we might try to use

Observe that , so the first term of the regret would be exactly what we need! For the other term, the optimal learning rate would give us

Now let’s see what we obtain with our approximation.

We need a way to upper bound that sum. The way to treat these sums, as we did in other cases, is to try to approximate them with integrals. So, we can use the following very handy Lemma that generalizes a lot of similar specific ones.

Lemma 1 Let

and

a nonincreasing function. Then

Proof: Denote by .

Summing over , we have the stated bound.

Using this Lemma, we have that

Surprisingly, this term is only a factor of 2 worse than what we would have got from the optimal choice of . However, this learning rate can be computed without knowledge of the future and it can actually be used! Overall, with this choice we get

Note that it is possible improve the constant in front of the bound to by multiplying the learning rates by

. So, putting all together we have the following theorem.

Theorem 2 Let

a closed non-empty convex set with diameter

, i.e.

. Let

an arbitrary sequence of convex functions

subdifferentiable in open sets containing

for

. Pick any

and

. Then,

, the following regret bound holds

The second equality in the theorem clearly show the advantage of this learning rates: We obtain (almost) the same guarantee we would have got knowing the future gradients!

This is an interesting result on its own: it gives a principled way to set the learning rates with an almost optimal guarantee. However, there are also other consequences of this simple regret. First, we will specialize this result to the case that the losses are smooth.

2. Convex Analysis Bits: Smooth functions

We now consider a family of loss functions that have the characteristic of being lower bounded by the squared norm of the subgradient. We will also introduce the concept of dual norms. While dual norms are not strictly needed for this lecture, they give more generality and at the same time they allows me to slowly introduce some of the concepts that will be needed for the lectures on Online Mirror Descent.

Definition 3 The dual norm

of a norm

is defined as

.

Example 1 The dual norm of the L2 norm is the L2 norm. Indeed,

by Cauchy-Schwartz inequality. Also, set

, so

.

If you never saw it before, the concept of dual norm can be a bit weird at first. One way to understand it is that it is a way to measure how “big” are linear functionals. For example, consider the linear function , we want to try to understand how big it is. So, we can measure

that is we measure how big is the output of the linear function compared to its input

, where

is measured with some norm. Now, you can show that the above is equivalent to the dual norm of

.

Remark 1 The definition of dual norm immediately implies

.

Now we can introduce smooth functions, using the dual norms defined above.

Definition 4 Let

differentiable. We say that

is

-smooth w.r.t.

if

for all

.

Keeping in mind the intuition above on dual norms, taking the dual norm of a gradient makes sense if you associate each gradient with the linear functional , that is the one needed to create linear approximation of

in

.

Smooth functions have many properties, for example a smooth function can be upper bounded by a quadratic. However, in the following we will need the following property.

Theorem 5 (e.g. Srebro, N. and Sridharan, K. and Tewari, A., 2010, Lemma 4.1) Let

be

-smooth and bounded from below, then for all

3. bounds

Assume now that the loss functions are bounded from below and

smooth. Without loss of generality, we can assume that each of them is bounded from below by 0. Under these assumptions, from the regret in (2) and Theorem 5 we immediately obtain

This is an implicit bound, in the sense that appears on both sides of the inequality. To makes it explicit, we will use the following simple Lemma (proof left as an exercise).

So, we have the following theorem

Theorem 7 Let

a closed non-empty convex set with diameter

, i.e.

. Let

an arbitrary sequence of non-negative convex functions

![{\ell_t:{\mathbb R}^d \rightarrow (-\infty, +\infty]}](https://s0.wp.com/latex.php?latex=%7B%5Cell_t%3A%7B%5Cmathbb+R%7D%5Ed+%5Crightarrow+%28-%5Cinfty%2C+%2B%5Cinfty%5D%7D&bg=ffffff&fg=000000&s=0&c=20201002)

-smooth in open sets containing

for

. Pick any

and

. Then,

, the following regret bound holds

This regret guarantee is very interesting because in the worst case it is still of the order , but in the best case scenario it becomes a constant! In fact, if there exists a

such that

we get a constant regret. Basically, if the losses are “easy”, the algorithm adapts to this situation and gives us a better regret.

These kind of guarantees are called bounds because they depend on the cumulative competitor loss that can be denoted by

.

4. AdaGrad

We now present another application of the regret bound in (2). AdaGrad, that stands for Adaptive Gradient, is an Online Convex Optimization algorithm proposed independentely by (H. B. McMahan and M. J. Streeter, 2010) and (J. Duchi and E. Hazan and Y. Singer, 2010). It aims at being adaptive to the sequence of gradients. It is usually known as a stochastic optimization algorithm, but in reality it was proposed for Online Convex Optimization (OCO). To use it as a stochastic algorithm, you should use an online-to-batch conversion, otherwise you do not have any guarantee of convergence.

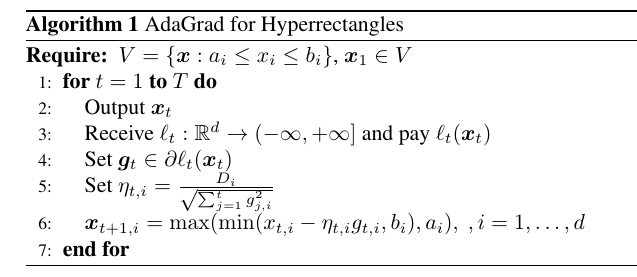

We will present a proof that only allows hyperrectangles as feasible sets , on the other hand the restriction makes the proof almost trivial. Let’s see how it works.

AdaGrad has key ingredients:

- A coordinate-wise learning process;

- The adaptive learning rates in (1).

For the first ingredient, as we said, the regret of any OCO problem can be upper bounded by the regret of the Online Linear Optimization (OLO) problem. That is,

Now, the essential observation is to explicitely write the inner product as a sum of product over the single coordinates:

where we denoted by the regret of the 1-dimensional OLO problem over coordinate

, that is

. In words, we can decompose the original regret as the sum of

OLO regret minimization problems and we can try to focus on each one of them separately.

A good candidate for the 1-dimensional problems is OSD with the learning rates in (1). We can specialize the regret in (2) to the 1-dimensional case for linear losses, so we get for each coordinate

This choice gives us the AdaGrad algorithm in Algorithm 1.

Putting all together, we have immediately the following regret guarantee.

Theorem 8 Let

with diameters along each coordinate equal to

. Let

an arbitrary sequence of convex functions

subdifferentiable in open sets containing

for

. Pick any

and

. Then,

, the following regret bound holds

Is this a better regret bound compared to the one in Theorem 2? It depends! To compare the two we have to consider that is a hyperrectangle because the analysis of AdaGrad above only works for hyperrectangle. Then, we have to compare

From Cauchy-Schwarz, we have that . So, assuming the same sequence of subgradients, AdaGrad has always a better regret on hyperrectangles. Also, note that

So, in the case that is a hypercube we have

and

, the bound of AdaGrad is between

and

times the bound of Theorem 2. In other words, if we are lucky with the subgradients, the particular shape of the domain might save us a factor of

in the guarantee.

Note that the more general analysis of AdaGrad allows to consider arbitrary domains, but it does not change the general message that the best domains for AdaGrad are hypercubes. We will explore this issue of choosing the online algorithm based on the shape of the feasible set when we will introduce Online Mirror Descent.

Hidden in the guarantee is that the biggest advantage of AdaGrad is the property of being coordinate-wise scale-free. That is, if each coordinate of the gradients are multiplied by different constants, the learning rate will automatically scale them back. This is hidden by the fact that the optimal solution would also scale accordingly, but the fixed diameters of the feasible set hide it. This might be useful in the case the ranges of coordinates of the gradients are vastly different one from the other. Indeed, this does happen in the stochastic optimization of deep neural networks, where the first layers have smaller magnitude of the gradients compared to the last layers.

5. History Bits

The adaptive learning rate in (1) first appeared in (M. Streeter and H. B. McMahan, 2010). However, similar methods were used long time before. Indeed, the key observation to approximate oracle quantities with estimates up to time was first proposed in the self-confident algorithms (P. Auer and N. Cesa-Bianchi and C. Gentile, 2002), where the learning rate is inversely proportional to the square root of the cumulative loss of the algorithm, and for smooth losses it implies the

bounds similar to the one in Theorem 7.

AdaGrad was proposed in basically identically form independently by two groups at the same conference: (H. B. McMahan and M. J. Streeter, 2010) and (J. Duchi and E. Hazan and Y. Singer, 2010). The analysis presented here is the one in (M. Streeter and H. B. McMahan, 2010) that does not handle generic feasible sets and does not support “full-matrices”, i.e. full-matrix learning rates instead of diagonal ones. However, in machine learning applications AdaGrad is rarely used with a projection step (even if doing so provably destroys the worst-case performance (F. Orabona and D. Pál, 2018)). Also, in the adversarial setting full-matrices do not seem to offer advantages in terms of regret compared to diagonal ones.

Note that the AdaGrad learning rate is usually written as

where is a small constant used to prevent division by zero. In reality,

is not necessary and removing it makes the updates scale-free, as stressed in (F. Orabona and D. Pál, 2018).

AdaGrad inspired an incredible number of clones, most of them with similar, worse, or no regret guarantees. The keyword “adaptive” itself has shifted its meaning over time. It used to denote the ability of the algorithm to obtain the same guarantee as it knew in advance a particular property of the data (i.e. adaptive to the gradients/noise/scale = (almost) same performance as it knew the gradients/noise/scale in advance). Indeed, in Statistics this keyword is used with the same meaning. However, “adaptive” has changed its meaning over time. Nowadays, it seems to denote any kind of coordinate-wise learning rates that does not guarantee anything in particular.

6. Exercises

Exercise 1 Prove that the dual norm of

is

, where

and

.

Exercise 2 Show that using online subgradient descent on a bounded domain with the learning rates

with Lipschitz, smooth, and strongly convex functions you can get

bounds.

Exercise 3 Prove that the logistic loss

, where

and

is

-smooth w.r.t.

.

Hi, nice post!

A few comments:

In theorem 8 I think there’s a typo in the definition of $\eta_{t, i}$: in the denominator the sum should be $\sum_{j=1}^t g_{j, i}^2$ as in Algorithm 1, right?

Also, I’m not totally clear about the comparison between Adagrad and OSD. When you say “It is immediate to see..”, why the first term is smaller or equal than the third term?

Based on my reasoning, if we apply Cauchy-Schwartz we get

$$ \sum_{i=1}^d \sqrt{ \sum_{t=1}^T g_{t, i}^2} \leq \sqrt{d} \sqrt{ \sum_{t=1}^T \| g_t \|_2^2 } $$

and then we can multiply both terms by $ D_\infty $. This would be fine if $ D = \sqrt{d} D_\infty $, but that should hold with inequality, i.e. $ D \leq \sqrt{d} D_\infty $. Indeed, assuming we use the definition of D given in lecture 2, $ D = \max_{x, y \in V} \| x – y \| = \| b – a \| = \sqrt{ \sum_{i=1}^d D_i^2} \leq D_\infty \sqrt{d} $. What am I missing?

LikeLike

Thanks for the finding the typo, I fixed it.

Also, there was some confusion in the text regarding hyperrectangles and hypercubes. I fixed it and made the comparison clearer: It is always better on hyperrectangles and on hypercubes you can might save a factor sqrt{d}. I hope it is better/correct now.

LikeLike

Any hint on how to solve Exercise 2? I followed a similar derivation used for the L* bounds in the notes, but cannot see how we can use the strong convexity to achieve a logarithmic bound

LikeLike

Hi Anirudh, first let me say that the learning rate in (1) won’t work as it is. You need a hybrid learning rate between the one for strongly convex functions and the one in (1). Start from the one for strongly convex functions and try to make it data-dependent.

LikeLike

Thanks for the reply! Will try it as you suggested

LikeLike

It seems there was an error in the text of exercise 2, sorry! I have now fixed it…

LikeLike

Thankss great blog post

LikeLiked by 1 person